AI is everywhere right now. ChatGPT, Gemini, Perplexity, you name it. These tools are arriving fast and changing how we work, think, and document. And let’s be honest… it’s tempting to use them for everything.

And sure, it feels like we’ve entered a new era. But here’s the catch: while the tech has taken off, the rules haven’t changed much.

USC researchers recently found that many doctors using ChatGPT were unknowingly violating HIPAA, often by pasting in what felt like “anonymized” patient data without stripping all 18 identifiers. And a recent JAMA study showed that when ChatGPT was used for medical recommendations, its responses were often incomplete or inaccurate, raising major concerns about safety and reliability.

Now, we know most clinicians are already doing the right thing: being careful, being thoughtful, and wanting to protect both the patient’s privacy and their own peace of mind. So if you’re already playing it safe, great. We’re just here to make that a little easier.

Let’s walk through 10 specific practices to avoid when using ChatGPT (or any AI platform), with helpful links and context to make sense of the risks.

Disclaimer: While these are general tips, it is important to conduct thorough research and due diligence when selecting AI tools. We don’t endorse or promote any specific AI tools mentioned here. This article is for educational and informational purposes only. It is not intended to provide legal, financial, or medical advice. Always comply with HIPAA and institutional policies. For any decisions that impact patient care or finances, consult a qualified professional.

Top 10 Absolute Don’ts for Docs Using ChatGPT

1. Don’t put patient info into ChatGPT without a BAA

Even if ChatGPT is encrypted, that doesn’t mean it is authorized to handle protected health information (PHI). Under HIPAA, any vendor that handles PHI on your behalf must have a Business Associate Agreement (BAA) in place. The standard consumer version of ChatGPT doesn’t.

If you want to use AI with patient data, use only institution-approved tools that meet HIPAA standards, or fully de-identify the data first.

2. Don’t assume encryption makes a tool HIPAA-compliant

It’s easy to assume that encryption is enough, but encrypted PHI is still PHI. Under the HIPAA Security Rule, encryption doesn’t eliminate your legal obligation to protect patient data or your need for a BAA.

Make sure any AI platform handling medical information is not only encrypted but also legally authorized to receive it.

3. Don’t paste full patient charts into ChatGPT

Sharing an entire patient record with an AI tool often violates HIPAA’s Minimum Necessary Rule, which requires that disclosures be limited to the smallest amount of information needed.

Instead, extract only what’s essential, or summarize first and ask AI for help with language or structure, not content.

4. Don’t rely on quick redactions and call it de-identified

HIPAA outlines two methods for de-identification: Expert Determination or Safe Harbor, which requires removal of 18 specific identifiers. Most quick redactions fall short. According to HHS guidance, simply removing names or dates is not enough.

Use proper tools for de-identification, or avoid entering PHI altogether.
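For readers who like to see it spelled out, here is a minimal Python sketch of why quick redactions fall short. It simply tracks which of the 18 Safe Harbor identifier categories (paraphrased from 45 CFR 164.514(b)(2)) a redaction has addressed. The short labels and the helper name `remaining_identifiers` are our own hypothetical choices, and this is a checklist aid, not a de-identification tool: Safe Harbor also requires that you have no actual knowledge that the remaining information could identify the patient.

```python
# Hypothetical checklist helper (NOT a de-identification tool).
# Keys are our own short labels; descriptions paraphrase the 18 Safe Harbor
# identifier categories listed in 45 CFR 164.514(b)(2).
SAFE_HARBOR_IDENTIFIERS: dict[str, str] = {
    "names": "Names",
    "geography": "Geographic subdivisions smaller than a state (street address, city, county, ZIP code)",
    "dates": "All date elements except year tied to the patient, plus ages over 89",
    "phone": "Telephone numbers",
    "fax": "Fax numbers",
    "email": "Email addresses",
    "ssn": "Social Security numbers",
    "mrn": "Medical record numbers",
    "health_plan": "Health plan beneficiary numbers",
    "account": "Account numbers",
    "license": "Certificate/license numbers",
    "vehicle": "Vehicle identifiers and serial numbers, including license plates",
    "device": "Device identifiers and serial numbers",
    "url": "Web URLs",
    "ip": "IP addresses",
    "biometric": "Biometric identifiers, including finger and voice prints",
    "photo": "Full-face photographs and comparable images",
    "other_unique": "Any other unique identifying number, characteristic, or code",
}


def remaining_identifiers(removed: set[str]) -> list[str]:
    """Return the Safe Harbor categories a redaction has NOT yet addressed."""
    unknown = removed - set(SAFE_HARBOR_IDENTIFIERS)
    if unknown:
        raise ValueError(f"Unrecognized categories: {sorted(unknown)}")
    return [key for key in SAFE_HARBOR_IDENTIFIERS if key not in removed]


if __name__ == "__main__":
    # A typical "quick redaction": strip names and dates, nothing else.
    quick_redaction = {"names", "dates"}
    missing = remaining_identifiers(quick_redaction)
    print(f"{len(missing)} of {len(SAFE_HARBOR_IDENTIFIERS)} categories still unaddressed:")
    for key in missing:
        print(" -", SAFE_HARBOR_IDENTIFIERS[key])
```

Running it reports that 16 of the 18 categories remain after stripping only names and dates, which is exactly the gap the HHS guidance points to.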

5. Don’t use ChatGPT to make clinical decisions you can’t verify

If the basis for an AI output can’t be fully explained or independently reviewed, the FDA’s FAQs on clinical decision support software suggest the tool may be regulated as a medical device.

ChatGPT is best used for non-clinical tasks: summaries, drafts, educational content… not direct clinical decision-making.

6. Don’t prescribe or manage meds through ChatGPT

Prescribing medications, especially controlled substances, requires secure, certified systems. The DEA’s rules on electronic prescriptions for controlled substances (EPCS) lay out all the safeguards, and ChatGPT isn’t compliant.

Use trusted, secure platforms built for prescribing, like DrFirst or Surescripts.

7. Don’t use AI to copy-paste or exaggerate documentation for billing

The OIG and CMS have flagged the practice of cloning or “copy-pasting” notes as a serious compliance issue. In one high-profile case, Somerset Cardiology Group paid over $422,000 after the OIG found it had cloned patient progress notes and improperly billed Medicare based on falsified documentation.

Let AI help you outline or format, but make sure the final note reflects the actual care provided and your personal clinical judgment.

8. Don’t use AI in ways that cross state licensure boundaries

Even when AI is involved, delivering medical care to a patient located in another state still triggers that state’s licensure requirements. According to the Center for Connected Health Policy (CCHP), care via telehealth is always considered rendered at the patient’s physical location, which typically means the provider must be licensed there unless exceptions apply.

If you’re using AI to support care, make sure you’re practicing within your licensed jurisdictions, or keep the output strictly educational and non-clinical.

9. Don’t blur boundaries with patients through AI

Even online, professional responsibilities remain the same. The Annals of Internal Medicine reminds us that boundaries between personal and professional realms can easily blur online, and physicians should actively work to keep them separate to maintain trust and ethical standards in the patient–physician relationship.

Avoid using AI in casual patient chats or DMs. Stick to secure, formal communication platforms.

10. Don’t make misleading AI-powered marketing claims

The FTC is cracking down on deceptive AI claims. In a 2025 enforcement action, the agency fined DoNotPay for marketing itself as “the world’s first robot lawyer,” even though its AI lacked adequate training to deliver accurate legal advice.

While that case involved legal services, the message carries over to healthcare, where the stakes are even higher. Avoid vague or inflated claims. Use honest terms like “AI-assisted” or “AI-enhanced,” and clearly explain what AI does and what it doesn’t.

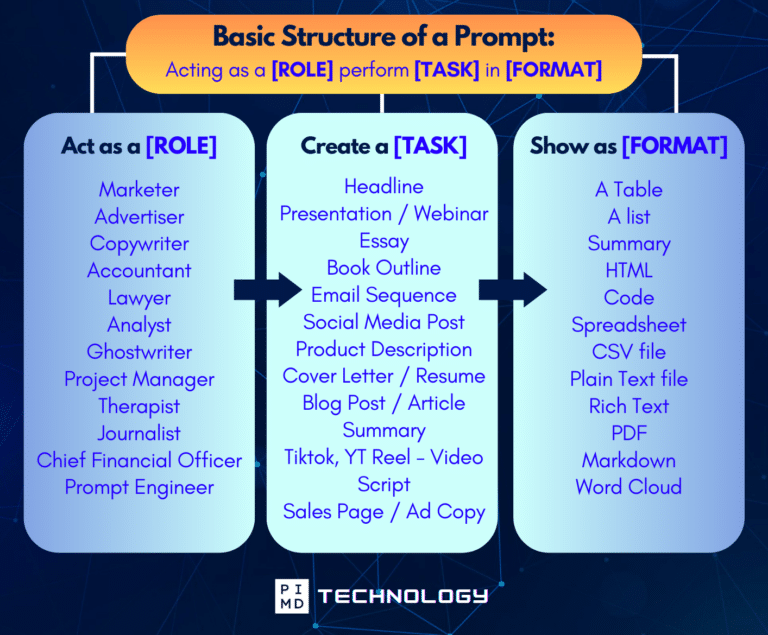

Unlock the Full Power of ChatGPT With This Copy-and-Paste Prompt Formula!

Download the Complete ChatGPT Cheat Sheet! Your go-to guide to writing better, faster prompts in seconds. Whether you’re crafting emails, social posts, or presentations, just follow the formula to get results instantly.

Save time. Get clarity. Create smarter.

Final Thoughts: Do Your Due Diligence

AI is moving fast. The tools are powerful, accessible, and honestly… kind of fun to use. For many of us, it feels like we’re standing on the edge of something game-changing in healthcare. And we are.

But with that opportunity comes responsibility.

It’s easy to get caught up in what AI can do and forget to pause and ask what it should do, especially in sensitive environments.

So this isn’t about fear or rigid rules. It’s about awareness. It’s about taking a beat to double-check and to lean on the resources around us when we’re unsure. None of us is expected to have every answer. That’s why legal and compliance teams exist. They’re on our side.

Btw, this also isn’t legal advice or a substitute for your institution’s policies. It’s just a helpful nudge, a shared reminder as we all try to navigate this tech thoughtfully and responsibly. Do your own due diligence, as always.

Here’s a quick checklist we’ve found useful to keep close:

✅ Default to de-identify, or don’t share.

✅ Choose institution-approved AI with a BAA and proper administrative/technical safeguards.

✅ Always apply clinician oversight and document your judgment.

✅ When in doubt, check with privacy, compliance, or legal.

We’re learning together. So let’s keep asking the right questions, challenging assumptions, and building habits that keep us safe.

If you want to learn more about AI and other cool AI tools, make sure to subscribe to our newsletter! We also have a free AI resource page where we share the latest tips, tricks, and news to help you make the most of technology.

To go deeper, check out PIMDCON 2025: The Physician Real Estate & Entrepreneurship Conference. You’ll gain real-world strategies from doctors who are successfully integrating AI and business for big results.

See you again next time! As always, make it happen.

Disclaimer: The information provided here is based on available public data and may not be entirely accurate or up-to-date. It’s recommended to contact the respective companies/individuals for detailed information on features, pricing, and availability. This article is for educational and informational purposes only. It is not intended to provide legal, financial, or medical advice. Always comply with HIPAA and institutional policies. For any decisions that impact patient care or finances, consult a qualified professional.

IF YOU WANT MORE CONTENT LIKE THIS, MAKE SURE YOU SUBSCRIBE TO OUR NEWSLETTER TO GET UPDATES ON THE LATEST TRENDS FOR AI, TECH, AND SO MUCH MORE.

Peter Kim, MD is the founder of Passive Income MD, the creator of Passive Real Estate Academy, and offers weekly education through his Monday podcast, the Passive Income MD Podcast. Join our community at the Passive Income Doc Facebook Group.

Further Reading